Fine-Tuning Llama 2: A Step-by-Step Guide to Customizing the Large Language Model (DataCamp)

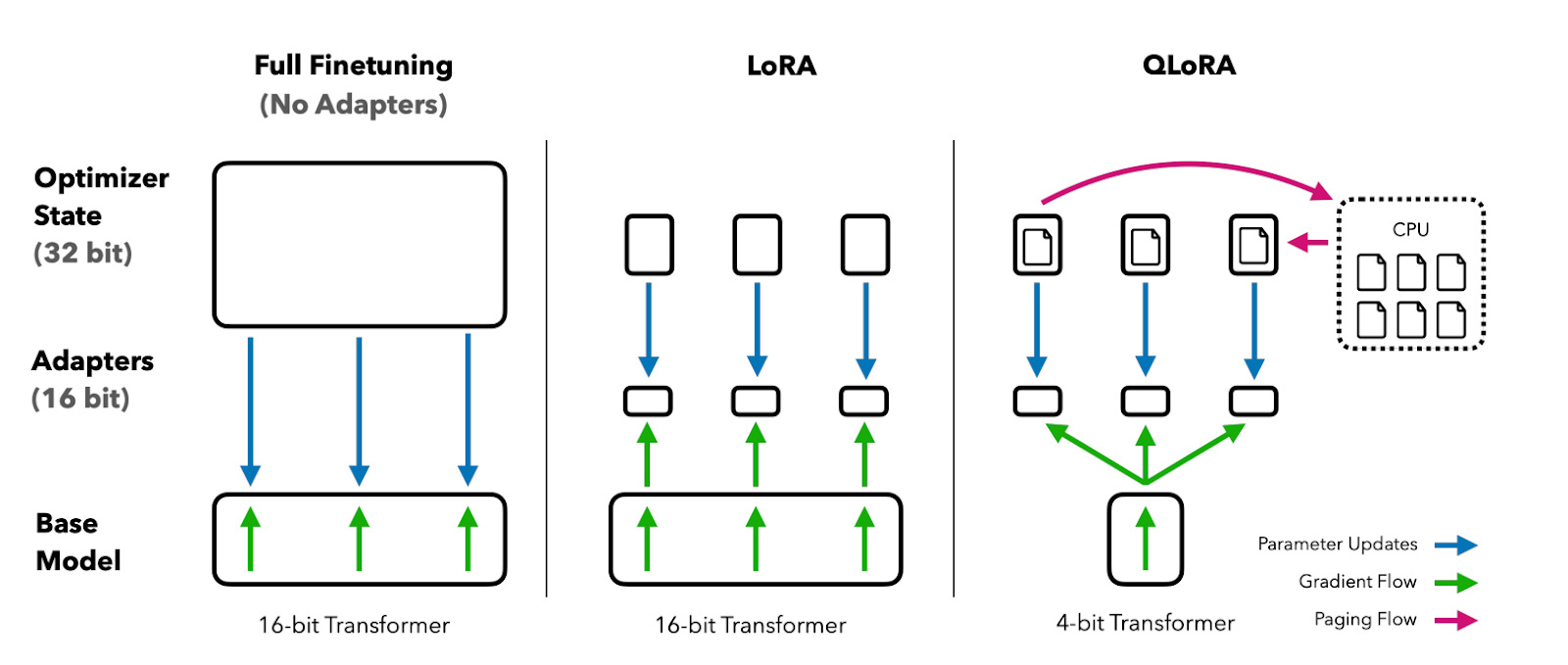

This part covers the steps required to fine-tune the 7-billion-parameter Llama 2 model on a T4 GPU.

How to Fine-Tune Llama 2 and Unlock its Full Potential: Meta AI recently introduced Llama 2, the latest version of its open-source large language model framework, created in ...

If you want to use more tokens, you will need to fine-tune the model so that it supports longer sequences. More information and examples on fine-tuning can be found in the Llama Recipes repository. A multi-GPU LoRA run is launched there with a command of the form `torchrun --nnodes 1 --nproc_per_node 4 llama_finetuning.py --enable_fsdp --use_peft --peft_method lora --model_name path_to_model_directory/7B ...` (the remaining arguments are cut off in the source).

In this blog we compare full-parameter fine-tuning with LoRA and answer questions about the strengths and weaknesses of the two techniques. We train the Llama 2 models on the ...
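To make the full-parameter versus LoRA comparison concrete, here is a minimal sketch that counts how many weights LoRA actually trains. The numbers are assumptions for illustration, not values taken from the posts quoted above: a hidden size of 4096 and 32 decoder layers (the published Llama 2 7B configuration), rank 8, adapters on two square attention projections per layer, and a nominal 7 billion total parameters. The helper name `lora_trainable_params` is hypothetical.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int, n_matrices: int) -> int:
    """Count LoRA weights: each adapted matrix gains A (rank x d_in) and B (d_out x rank)."""
    return n_matrices * rank * (d_in + d_out)


# Assumed configuration (illustrative, see the paragraph above).
HIDDEN_SIZE = 4096         # Llama 2 7B hidden dimension
NUM_LAYERS = 32            # Llama 2 7B decoder layers
ADAPTED_PER_LAYER = 2      # assumption: two square attention projections per layer
RANK = 8                   # assumption: a commonly used LoRA rank
FULL_PARAMS = 7_000_000_000  # nominal "7B" parameter count

lora_params = lora_trainable_params(
    HIDDEN_SIZE, HIDDEN_SIZE, RANK, NUM_LAYERS * ADAPTED_PER_LAYER
)
fraction = lora_params / FULL_PARAMS

print(f"LoRA trainable parameters: {lora_params:,}")
print(f"Share of the full model:   {fraction:.4%}")
```

Under these assumptions LoRA updates well under 0.1% of the weights, which is the main reason it fits on hardware where full-parameter fine-tuning does not. Adapting more projection matrices or raising the rank increases the count linearly.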

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2.

No, there is no way to run a Llama-2-70B chat model entirely on an 8 GB GPU alone. For the file and memory sizes of Q2 quantization, see below.

Llama 2 offers a range of pretrained and fine-tuned language models from 7B to 70B parameters, with 40% more training data than its predecessor and a 4k-token context window.

`device = f'cuda:{cuda.current_device()}' if cuda.is_available() else 'cpu'`. Set quantization configuration to load large ...
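The claim about the 8 GB GPU can be checked with back-of-the-envelope arithmetic. The sketch below estimates the memory needed for the weights alone at a given bit width. It is a lower bound under stated assumptions: it uses nominal parameter counts (7B, 70B), treats 1 GB as 10^9 bytes, and ignores activations, the KV cache, and the per-block overhead that real quantization formats add. The helper names `weight_memory_gb` and `fits_on_gpu` are hypothetical.

```python
def weight_memory_gb(n_params: int, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_on_gpu(n_params: int, bits_per_weight: float, gpu_gb: float) -> bool:
    """True if the weights alone fit; real usage needs extra headroom."""
    return weight_memory_gb(n_params, bits_per_weight) <= gpu_gb


LLAMA_7B = 7_000_000_000    # nominal parameter counts (assumption)
LLAMA_70B = 70_000_000_000

for name, n_params in [("7B", LLAMA_7B), ("70B", LLAMA_70B)]:
    for bits in (16, 4, 2):
        gb = weight_memory_gb(n_params, bits)
        print(f"Llama 2 {name} at {bits:>2}-bit: {gb:6.1f} GB of weights")

# Even at a nominal 2 bits per weight, 70B parameters exceed 8 GB.
print("70B at 2-bit fits in 8 GB:", fits_on_gpu(LLAMA_70B, 2, 8))
```

By the same arithmetic, the 7B model at 4 bits needs roughly 3.5 GB for its weights, which is why quantized loading is the usual route on a 16 GB T4.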

Llama 2 is broadly available to developers and licensees through a variety of hosting providers and on the ...

No trademark licenses are granted under this Agreement, and in connection with the Llama Materials ...

The commercial limitation in paragraph 2 of the LLAMA COMMUNITY LICENSE AGREEMENT is contrary to ...

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. All three currently available Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens.

Llama 2 - Meta AI: This release includes model weights and starting code for pretrained and fine-tuned Llama language models (Llama Chat, Code Llama).

The Llama 2 release introduces a family of pretrained and fine-tuned LLMs ranging in scale from 7B to 70B parameters (7B, 13B, 70B). Llama 2 models are available in three parameter sizes (7B, 13B, and 70B) and come in both ...

